Key takeaways:

- Any time you UPDATE or DELETE, you will leave a dead row behind (and potentially a dead index entry) that needs to be cleaned up later by some form of vacuum.

- When tables grow very large because of excessive dead tuples then performance will tend to decrease. Therefore the VACUUM process should never be avoided.

- It’s better to have a steady low-intensity vacuum work, using the autovacuum feature of the database, instead of disabling that feature and having to do that cleanup in larger blocks.

Understanding vacuum

This is an optional process. It is enabled by default using a parameter called autovacuum in postgresql.conf. This process automates the execution of vacuum and analyze tasks.

First, let’s understand vacuum. Assume that we delete a few records from a table. PostgreSQL does not immediately remove the deleted rows from the database. These are just marked as deleted. Similarly, when a tuple is updated, it’s equivalent to one delete plus one insert. The previous version of the record continues to be in the data file. Each update of a row generates a new version of the row. PostgreSQL introduce versions of a row because of the active transactions, which want to see the data as it was before. As a result of this activity, there will be a lot of unusable space in the data files. After some time, these dead records become irrelevant as there are no transactions still around to see the old data. However, because the space is not marked as reusable, inserts and updates happen in new pages of the database.

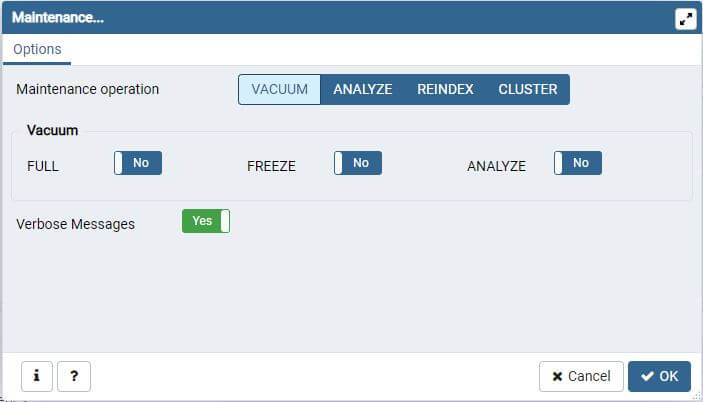

The vacuum process marks space as being available for reuse when it finds deleted or updated records within the table. There is a vacuum command to do this manually. Vacuum does not lock the table.

In contrast, VACUUM FULL, in addition to marking the space as reusable, removes the deleted or updated records and reorders the table data. This requires an exclusive lock on the table.

Autovacuum

Back in the early days of PostgreSQL projects, people had to run VACUUM manually. Nowadays, PostgreSQL users can rely on a tool called autovacuum, which is part of the PostgreSQL Server infrastructure.

Furthermore, if we execute vacuum with the “analyze” option, it will additionally read the records in the tables and generates statistics that will be later used by the query planner. Autovacuum automates the vacuum process. It’s recommended to have the autovacuum process do the cleanup of the data files unless there are specific reasons not to. In cases where the database is under heavy load for most part of the day, vacuum can be scheduled during off-peak hours. Although there are few or no deletes/updates in the cluster, it’s useful to have routine vacuuming as vacuum updates the data statistics used by the planner.

Vacuum full

Instead of normal VACUUM, you can also use VACUUM FULL. However, VACUUM FULL actually locks the table and rewrites the entire relation.

There are two major downsides to doing that. The first is that it’s very time and resource-intensive. VACUUM FULL is likely to take a very long time if your table is big, and during that time it will have an exclusive lock on the table. That’s a very bad combination. The second issue is index bloat, covered in more detail in the next section.

To alleviate the situation of VACUUM FULL, you can check out pg_squeeze which can rewrite a table without blocking writes.

Watching VACUUM at work

After this introduction, it is time to see VACUUM in action.

Usually, people are surprised to find that there is no reduction in disk space from using vacuum. VACUUM will not shrink your tables and disk space is usually not returned to the file system.

We will create a table filled with 100,000 rows and with customized autovacuum settings.

CREATE TABLE vacuum_test (id int) WITH (autovacuum_enabled = off);

INSERT INTO vacuum_test

SELECT * FROM generate_series(1, 100000);Note that it is possible to turn autovacuum off for specific tables. Usually, this is not a good idea for most applications. However, for testing purposes, VACUUM is turned off in this example to ensure that nothing happens in the background.

First, let’s check the size of the table by using the following command:

SELECT pg_size_pretty(pg_relation_size('vacuum_test'));pg_size_pretty

----------------

3544 kB

(1 row)The pg_relation_size command returns the size of a table in bytes. The pg_size_pretty command will take this number and turn it into something human-readable.

Updates/Deletes will actually copy the rows

Then, all rows in the table will be updated using a simple UPDATE statement:

UPDATE vacuum_test SET id = id + 1; What happens in the background is crucial in order to understand PostgreSQL. The UPDATE operation will copy all rows. Question is why would the database engine copy all the rows? First, we don’t know whether the transaction will be successful or not and therefore the data cannot be overwritten. Second, concurrent transactions might still be seeing the old version of the data.

Let’s see if the size of the table will be larger after the changes have been made:

SELECT pg_size_pretty(pg_relation_size('vacuum_test’)); pg_size_pretty

----------------

7080 kB

(1 row)After UPDATE, people might try to return space to the filesystem:

VACUUM vacuum_test; As stated before, in most cases VACUUM does not return space to the file system. Instead, it will allow space to be reused. The table, therefore, does not shrink at all:

SELECT pg_size_pretty(pg_relation_size('vacuum_test')); pg_size_pretty

----------------

7080 kB

(1 row)As a result, the next UPDATE will not make the table grow furthermore as it will use the free space inside the table. However, a second UPDATE might make the table grow again because all the space is gone and additional storage is needed:

test=# UPDATE vacuum_test SET id = id + 1;

UPDATE 100000

test=# SELECT pg_size_pretty(pg_relation_size('vacuum_test'));

pg_size_pretty

----------------

7080 kB

(1 row)

test=# UPDATE vacuum_test SET id = id + 1;

UPDATE 100000

test=# SELECT pg_size_pretty(pg_relation_size('vacuum_test'));

pg_size_pretty

----------------

10 MB

(1 row)Technically speaking, space left behind from deletions or updates of data is placed into a free space map (FSM) by VACUUM. At this point, new allocations are done from that free space first, rather than by allocating new disk for them instead.

The impact of this observation is really important to remember after reading this post. Understanding storage is key to performance and administration. There is no performance tuning without really understanding storage.

Configuring vacuum and autovacuum

Autovacuum takes care of cleanup works in the background. It wakes up once per minute as specified in autovacuum_naptime = 1 in postgresql.conf, and checks whether there is work to do. If there is work, autovacuum will fork up to three worker processes, as specified in autovacuum_max_workers in postgresql.conf.

Autovacuum trigger the creation of a worker process based on some parameters found in postgresql.conf:

autovacuum_vacuum_scale_factor = 0.2

autovacuum_vacuum_threshold = 50

autovacuum_analyze_scale_factor = 0.1

autovacuum_analyze_threshold = 50 The autovacuum_vacuum_scale_factor parameter tells PostgreSQL that a table is worth vacuuming if 20% of data has been changed. The problem is that if a table consists of one row, one change is already 100%. It makes no sense to fork a process to clean up just one row. Therefore, autovacuum_vacuuum_threshold says that we need 20% and this 20% must be at least 50 rows. Otherwise, VACUUM won’t start. The same idea applies when it comes to statistics creation. We need 10% and at least 50 rows to justify new stats for the optimizer. However, autovacuum should create new statistics during a normal VACUUM to avoid unnecessary trips to the table.

Limit the duration of a transaction

VACUUM will reclaim free space as needed. However, the main question is when can VACUUM actually clean out rows and turn them into free space? The rule is this: A row can be reclaimed if it cannot be seen anymore by any transaction. This means that a row that is no longer seen even by the oldest active transaction can be considered to be really dead.

Certainly, long transactions can postpone cleanup for quite some time. The consequence of this could be table bloat. Tables will grow very large and performance will tend to decrease. Fortunately, starting with PostgreSQL 9.6, the database has a nice feature called old_snapshot_threshold that allows the administrator to limit the duration of a transaction.

To limit the lifetime of snapshots, you can make use of old_snapshot_threshold setting in postgresql.conf.

old_snapshot_threshold = -1

# -1 disables

# 0 is immediate

# 1min-60dIf this variable is set, transactions will fail after a certain amount of time. By limiting the age of a transaction, we reduce the risk of missing vacuum processing. Note that this setting is at the database level.

Fighting table bloat

Table bloat is one of the most common issues when dealing with PostgreSQL. When we are facing bad performance, it is always a good idea to find out if there are objects that occupy a lot more space than they are supposed to.

How can we tell if table bloat is happening? We could check the pg_stat_user_tables view:

\d pg_stat_user_tablesView "pg_catalog.pg_stat_user_tables"

Column | Type | Collation | Nullable | Default

---------------------+--------------------------+-----------+----------+---------

relid | oid | | |

schemaname | name | | |

....

n_live_tup | bigint | | |

n_dead_tup | bigint | | |The n_live_tup and n_dead_tup fields gives us an impression of what is going on. And what can we do if there is table bloat? The first option is to run the VACUUM FULL command. But as discussed before, VACUUM FULL clause needs a table lock. On a large table, this can be a real problem because users cannot write to the table while it is being rewritten.

First, can you use CLUSTER to rebuild the table? It essentially makes a clean second copy of the table and then substitutes it for the original once finished.

Second, if you are using at least PostgreSQL 9.6, you can use a tool called pg_squeeze. It organizes a table behind the scenes without blocking. This is especially useful if you are reorganizing a huge table.

Common vacuum and autovacuum problems

In many of the situations where VACUUM need to happen, such as index bloat and excessive dead tuples, the work involved can be so intensive that you decide VACUUM needs to be avoided. This is actually the opposite of what should be concluded. In reality, the answer to most vacuum-related problems is to vacuum more often. This reduces the amount of work done by each individual vacuum, and it will keep table sizes from getting so big in the first place.

There are however a few known issues that you can run into with vacuum and autovacuum.

Autovacuum is constantly running

While autovacuum stays running all the time, it shouldn’t be doing active work all the time. If it is, there are two common issues to investigate.

First, each time vacuum runs out of its allocated space, it needs to start over. The allocated space could be set via maintenance_work_mem. If you have set this setting too low relative to the size needed to vacuum your tables, vacuum can run much less efficiently than it should. Therefore autovacuum will be running much more often just to keep up.

The second problem is having a large number of databases. autovacuum tries to start one worker on each database. Since a worker starts every 60 seconds as it is set by default in autovacuum_naptime seconds. If you have 60 databases, a new worker will be started every second with the default parameters. You probably need to increase the nap time value if you have more than a dozen databases in your cluster.

Autovacuum is too disruptive

During the execution of VACUUM and ANALYZE commands, the system maintains an internal counter that keeps track of the estimated cost of the various I/O operations that are performed. When the accumulated cost reaches a limit (specified by vacuum_cost_limit), the process performing the operation will sleep for a short period of time, as specified by vacuum_cost_delay. Then it will reset the counter and continue execution.

The intent of this feature is to allow users to reduce the I/O impact of these commands on database activity. When it seems you can’t run vacuum during your busy times, increasing the cost delay to make autovacuum’s actions less disruptive is the way to go.

Autovacuum doesn’t keep up

The opposite case to the previous is also possible. If you have a busy server, you can easily discover that autovacuum never quite keeps up with incoming traffic. Particularly if you have a system that’s capable of good I/O performance, you can drop this delay by setting zero to vacuum_cost_delay parameter. This disables the cost-based vacuum delay feature and gives autovacuum more opportunities to keep pace with your server.

Conclusion

Understanding storage is key to performance and administration. When we are facing bad performance, it is always a good idea to find out if there are objects that occupy a lot more space than they are supposed to.

Properly maintaining every aspect of a database is time-consuming work, but the downside of not doing it can be even worse. Without good vacuuming practice, where occupied storage can grow up over time, the system will need to perform expensive operations or downtime to fully recover from.